Introduction

Optical character recognition (OCR) is a technology that enables computers to recognize text within images. OCR has a wide range of applications, from digitizing printed documents to extracting text from images for further processing. In this article, we explore the use of PaddleOCR and ONNX to perform OCR on images.

The image above shows an example of text extraction from an eKTP (Indonesian National ID Card). Our OCR engine (PaddleOCR) detects and extracts the text from the image, even when the text is upside down or skewed.

TL;DR: You can find the repository for this article at ruhyadi/vision-ocr. We provide a Docker image for the API engine, so you can easily deploy it on your local machine.

PaddleOCR

Thanks to PaddleOCR, we can easily build a robust OCR engine that supports multiple languages. PaddleOCR provides pre-trained models for text detection, recognition, orientation classification, and end-to-end OCR tasks. PaddleOCR is built on the PaddlePaddle deep learning framework (think PyTorch, but from China).

We prefer PaddleOCR over MMOCR because PaddleOCR detects and recognizes text per line rather than per word. Don't get us wrong: MMOCR is a great library, and you can easily learn OCR with it (its documentation is excellent), but for our use case we prefer PaddleOCR. By the way, PaddleOCR also has a great book in PDF form, so please take a look.

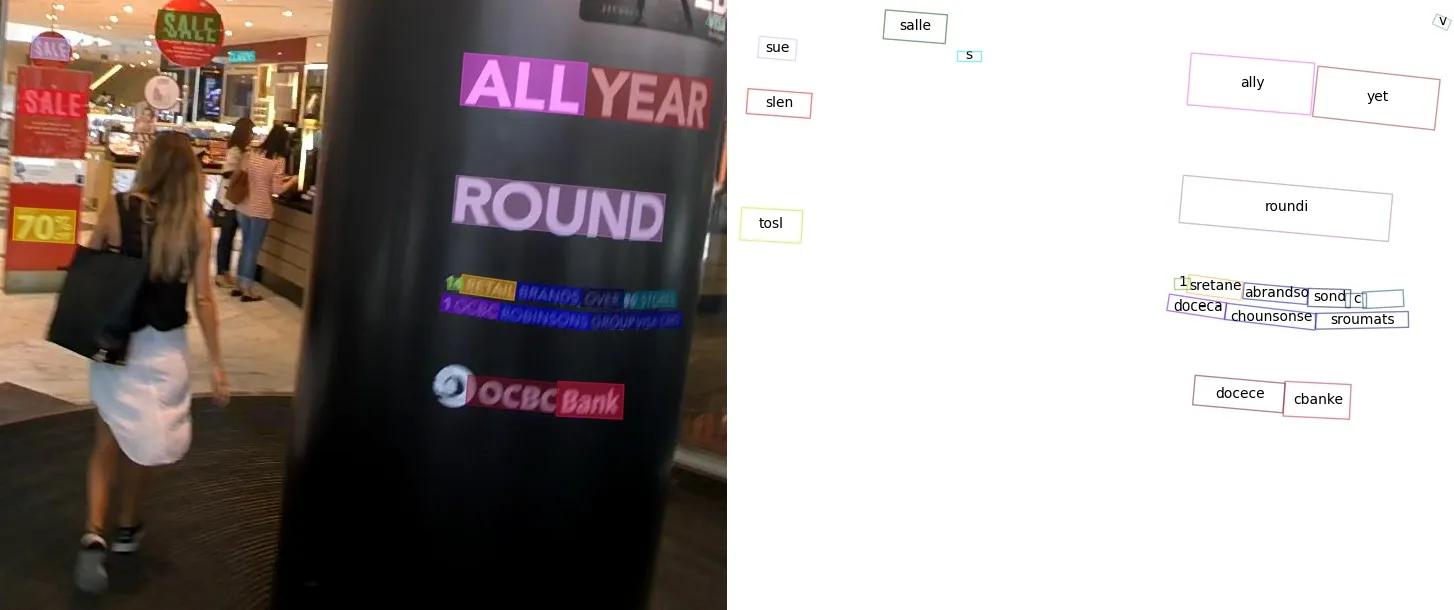

The image above shows the result of MMOCR text detection and recognition. As you can see, MMOCR is able to detect and recognize text at the word level. This is useful for tasks where word-level information is important, such as document analysis and translation.

In this article, we're using three models from PaddleOCR: text detection, text recognition, and text orientation classification. The detection model finds text regions in the image, the orientation classifier determines whether each region is upright or upside down, and the recognition model reads the text within each region. You can download the models from the PaddleOCR website:
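At inference time the three models run in sequence: detection proposes regions, the classifier decides whether each cropped region needs a 180-degree rotation, and recognition reads the result. The sketch below shows only that control flow. It assumes hypothetical `detect`, `classify`, and `recognize` callables wrapping the three ONNX sessions, axis-aligned boxes (PaddleOCR itself crops rotated quadrilaterals), classifier labels `"0"`/`"180"`, and an illustrative 0.9 confidence threshold before flipping.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

import numpy as np

# Hypothetical axis-aligned box: (x_min, y_min, x_max, y_max).
Box = Tuple[int, int, int, int]


@dataclass
class OcrResult:
    box: Box
    text: str
    orientation: str  # "up" or "down"
    score: float


def run_pipeline(
    image: np.ndarray,
    detect: Callable[[np.ndarray], List[Box]],                # hypothetical detection wrapper
    classify: Callable[[np.ndarray], Tuple[str, float]],      # hypothetical classifier wrapper
    recognize: Callable[[np.ndarray], Tuple[str, float]],     # hypothetical recognition wrapper
    flip_threshold: float = 0.9,                              # illustrative threshold (assumption)
) -> List[OcrResult]:
    """Chain detection -> orientation classification -> recognition."""
    results: List[OcrResult] = []
    for box in detect(image):
        x0, y0, x1, y1 = box
        crop = image[y0:y1, x0:x1]
        # Ask the classifier whether the crop is upside down.
        label, confidence = classify(crop)
        orientation = "up"
        if label == "180" and confidence >= flip_threshold:
            crop = np.rot90(crop, k=2)  # rotate 180 degrees before recognition
            orientation = "down"
        text, score = recognize(crop)
        results.append(OcrResult(box, text, orientation, score))
    return results
```

Running the classifier before recognition matters because a recognition model trained on upright lines will usually produce garbage on an upside-down crop; flipping only above a confidence threshold avoids rotating crops the classifier is unsure about.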

```bash
# download detection model
wget https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_infer.tar
tar -xvf en_PP-OCRv3_det_infer.tar

# download recognition model
wget https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_infer.tar
tar -xvf en_PP-OCRv3_rec_infer.tar

# download orientation classifier model
wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar
tar -xvf ch_ppocr_mobile_v2.0_cls_infer.tar
```

Because we're using PaddleOCR, the models are in PaddlePaddle format. Thankfully, PaddlePaddle provides the paddle2onnx library to convert PaddlePaddle models to ONNX format. You can convert the models with the following commands:

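```bash
# install paddle2onnx
pip install paddle2onnx

# convert detection model
paddle2onnx --model_dir ./en_PP-OCRv3_det_infer \
  --model_filename inference.pdmodel \
  --params_filename inference.pdiparams \
  --save_file ./ocr_det.onnx \
  --opset_version 11 \
  --input_shape_dict="{'x':[-1,3,-1,-1]}" \
  --enable_onnx_checker True
```

The input shape `[-1,3,-1,-1]` is in NCHW order: the batch size, height, and width are left dynamic (`-1`), while the channel count is fixed at 3. You can convert the other two models (recognition and orientation classifier) in the same way, pointing `--model_dir` and `--save_file` at the corresponding directories and output files. After converting the models to ONNX format, you can use them with ONNX Runtime for inference.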
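Since the three conversions differ only in the input directory and the output file, a small script can loop over all of them. This is a sketch that shells out to the same `paddle2onnx` flags shown above. The output names `ocr_rec.onnx` and `ocr_cls.onnx` are our own assumption, and reusing `{'x':[-1,3,-1,-1]}` for the recognition and classifier models assumes they also take a single NCHW input named `x`.

```python
import shlex
import subprocess
from typing import Dict, List

# Model directory -> output ONNX file (rec/cls output names are hypothetical).
MODELS: Dict[str, str] = {
    "./en_PP-OCRv3_det_infer": "./ocr_det.onnx",
    "./en_PP-OCRv3_rec_infer": "./ocr_rec.onnx",
    "./ch_ppocr_mobile_v2.0_cls_infer": "./ocr_cls.onnx",
}


def build_convert_cmd(model_dir: str, save_file: str) -> List[str]:
    """Build the same paddle2onnx invocation used for the detection model."""
    return [
        "paddle2onnx",
        "--model_dir", model_dir,
        "--model_filename", "inference.pdmodel",
        "--params_filename", "inference.pdiparams",
        "--save_file", save_file,
        "--opset_version", "11",
        # The shell strips the double quotes, so this is the argument it passes on.
        "--input_shape_dict={'x':[-1,3,-1,-1]}",
        "--enable_onnx_checker", "True",
    ]


def convert_all(dry_run: bool = True) -> List[List[str]]:
    """Print the commands (dry run) or execute them, stopping on the first failure."""
    cmds = [build_convert_cmd(d, f) for d, f in MODELS.items()]
    for cmd in cmds:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)
    return cmds
```

Run `convert_all(dry_run=True)` first to inspect the commands, then `convert_all(dry_run=False)` once `paddle2onnx` is installed and the three archives are extracted.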

ONNX Runtime

Imagine you have a model in PyTorch, TensorFlow, or PaddlePaddle, and you want to deploy it on a wide range of devices, from CPUs to GPUs to FPGAs. This is where ONNX Runtime comes in. ONNX Runtime is a high-performance inference engine for Open Neural Network Exchange (ONNX) models: once a model is converted to ONNX, the same file can run on different hardware through ONNX Runtime's execution providers.

But what is an ONNX model? ONNX is an open format built to represent deep learning models. ONNX defines a common set of operators (the building blocks of deep learning models) and a common file format (.onnx), enabling AI developers to use models with a variety of frameworks, tools, runtimes, and compilers.
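On the ONNX Runtime side, the target hardware is chosen through execution providers passed to `InferenceSession`, and which providers exist depends on the installed build (for example, `onnxruntime` vs. `onnxruntime-gpu`). Below is a small sketch for picking them; the preference order is our own assumption, while the strings are ONNX Runtime's standard provider names.

```python
from typing import List, Sequence

# Our preference order (assumption): fastest first, CPU as the universal fallback.
PREFERRED = [
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "CPUExecutionProvider",
]


def pick_providers(available: Sequence[str], preferred: Sequence[str] = PREFERRED) -> List[str]:
    """Return the preferred providers supported by this build, in preference order."""
    chosen = [p for p in preferred if p in available]
    # The CPU provider ships with every onnxruntime build, so always keep it last.
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen
```

Usage: `ort.InferenceSession("ocr_det.onnx", providers=pick_providers(ort.get_available_providers()))`. ONNX Runtime assigns each node to the first provider in the list that supports it, so keeping CPU last lets unsupported operators fall back instead of failing.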

To run an ONNX model, you only need a few lines of code. Here's an example that runs the converted detection model with ONNX Runtime:

```python
from typing import List

import numpy as np
import onnxruntime as ort

# load the model on the CPU execution provider (available in every onnxruntime build)
model = ort.InferenceSession("ocr_det.onnx", providers=["CPUExecutionProvider"])

# prepare the input: a random batch of one 3-channel 640x640 image
input_name = model.get_inputs()[0].name
input_data = np.random.rand(1, 3, 640, 640).astype(np.float32)

# run the model; passing None as the output names returns every output
output: List[np.ndarray] = model.run(None, {input_name: input_data})
```

In the code above, we load the ONNX model with `ort.InferenceSession`, prepare the input data, and run the model with `model.run`. The output of the model is a list of NumPy arrays, which you can use for further processing.
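The random tensor above only checks that the session runs. For a real image, the detection model expects a normalized NCHW float tensor whose height and width are multiples of 32. The sketch below mirrors what we understand PaddleOCR's default detection preprocessing to be (longest side capped at 960 px, ImageNet mean/std normalization); the nearest-neighbour resize is a NumPy-only simplification of the bilinear resize PaddleOCR performs with OpenCV, and `preprocess_det` is a hypothetical helper name.

```python
import numpy as np

# ImageNet statistics, which we assume match PaddleOCR's detection config.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def resize_nearest(image: np.ndarray, height: int, width: int) -> np.ndarray:
    """Nearest-neighbour resize via index arrays (cv2.resize would be smoother)."""
    rows = (np.arange(height) * image.shape[0] / height).astype(np.int64)
    cols = (np.arange(width) * image.shape[1] / width).astype(np.int64)
    return image[rows[:, None], cols[None, :]]


def preprocess_det(image: np.ndarray, limit_side_len: int = 960) -> np.ndarray:
    """HWC uint8 image -> float32 tensor (1, 3, H', W') with H', W' multiples of 32."""
    h, w = image.shape[:2]
    # Shrink so the longer side is at most limit_side_len (never upscale).
    ratio = min(1.0, limit_side_len / max(h, w))
    # The detector downsamples by 32, so round each side to a multiple of 32.
    new_h = max(32, int(round(h * ratio / 32)) * 32)
    new_w = max(32, int(round(w * ratio / 32)) * 32)
    resized = resize_nearest(image, new_h, new_w).astype(np.float32) / 255.0
    normalized = (resized - MEAN) / STD  # broadcasts over the channel axis
    chw = normalized.transpose(2, 0, 1)  # HWC -> CHW
    return np.ascontiguousarray(chw[np.newaxis], dtype=np.float32)
```

With the session from the example above, `model.run(None, {input_name: preprocess_det(image)})` would then replace the random input, where `image` is an HWC uint8 array loaded with the image library of your choice. A 1280x720 image, for instance, becomes a `(1, 3, 544, 960)` tensor.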

Vision-OCR

We've created a simple REST API service for OCR using PaddleOCR and ONNX Runtime. You can find the repository for this article at ruhyadi/vision-ocr. The repository contains the code for the OCR engine, as well as a Dockerfile for building the API engine.

Please make sure you have Docker installed on your machine. You can pull the Docker image from Docker Hub with the following command:

```bash
docker pull ruhyadi/vision-ocr:v1.0.0-api
```

After pulling the Docker image, you can run the API engine with the following command:

```bash
docker run \
  -d \
  --rm \
  --name vision-ocr-api \
  --network host \
  ruhyadi/vision-ocr:v1.0.0-api \
  python src/main.py
```

The API engine will be running on http://localhost:4700. You can test the API with the following command:

```bash
curl -X 'POST' \
  'http://localhost:4700/api/v1/engine/ocr/snapshot' \
  -H 'accept: application/json' \
  -H 'Content-Type: multipart/form-data' \
  -F 'image=@/PATH/TO/IMAGE.jpg;type=image/jpeg'
```

The API engine will return the OCR result in JSON format with the following structure:

```json
{
  "boxes": [
    [10, 10, 20, 20, 30, 30, 40, 40],
    [50, 50, 60, 60, 70, 70, 80, 80]
  ],
  "texts": ["Hello", "World"],
  "oris": ["up", "down"],
  "scores": [0.99, 0.98]
}
```

The `boxes` field contains the bounding boxes of the detected text regions, the `texts` field contains the recognized text, the `oris` field contains the orientation of the text, and the `scores` field contains the recognition confidence scores. Each field is a list with one entry per detected text line, in the same order. You can use this information for further processing, such as text extraction or translation.
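The same request can be made from Python, and because the four fields are parallel lists, a client will usually zip them into one record per line. Below is a standard-library sketch (no `requests` dependency) that builds the multipart body by hand. The URL, the `image` field name, and the JPEG content type come from the curl command above; `build_ocr_request`, `parse_ocr_response`, `ocr_snapshot`, and the `min_score` filter are hypothetical helpers, and we assume each box is a flat list of four (x, y) corner points, which is how the eight numbers in the example read.

```python
import json
import urllib.request
import uuid
from dataclasses import dataclass
from pathlib import Path
from typing import Any, Dict, List, Tuple

API_URL = "http://localhost:4700/api/v1/engine/ocr/snapshot"


@dataclass
class TextLine:
    points: List[Tuple[float, float]]  # four (x, y) corners (assumed layout)
    text: str
    orientation: str  # e.g. "up" or "down"
    score: float


def build_ocr_request(image_bytes: bytes, filename: str = "image.jpg", url: str = API_URL) -> urllib.request.Request:
    """Build the same multipart/form-data POST as the curl command (field name `image`)."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="image"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: image/jpeg\r\n\r\n",
        image_bytes,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    headers = {
        "accept": "application/json",
        "Content-Type": f"multipart/form-data; boundary={boundary}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def parse_ocr_response(payload: Dict[str, Any], min_score: float = 0.0) -> List[TextLine]:
    """Zip the parallel lists of the response into one record per detected line."""
    lines: List[TextLine] = []
    for box, text, ori, score in zip(payload["boxes"], payload["texts"], payload["oris"], payload["scores"]):
        # Assumed box layout: [x1, y1, x2, y2, x3, y3, x4, y4].
        points = [(box[i], box[i + 1]) for i in range(0, len(box), 2)]
        if score >= min_score:
            lines.append(TextLine(points, text, ori, score))
    return lines


def ocr_snapshot(path: str, min_score: float = 0.0) -> List[TextLine]:
    """Send an image file to the running API and return the parsed text lines."""
    image_path = Path(path)
    request = build_ocr_request(image_path.read_bytes(), filename=image_path.name)
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.loads(response.read().decode("utf-8"))
    return parse_ocr_response(payload, min_score=min_score)
```

With the container running, `for line in ocr_snapshot("/PATH/TO/IMAGE.jpg", min_score=0.9): print(line.text, line.score)` prints each recognized line that clears the threshold.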

Conclusion

In this article, we explored the use of PaddleOCR and ONNX to perform OCR on images. We converted the PaddleOCR models to ONNX format and used ONNX Runtime for inference. We also created a simple REST API service for OCR using PaddleOCR and ONNX Runtime.